Summary

This content was reposted from the web and is kept as personal study notes; please do not redistribute it.

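Why storage volumes are needed

Files inside a container live on disk only temporarily, which causes problems for important applications running in containers:

- When a container crashes, the kubelet restarts it with a clean filesystem, so files written inside the container are lost.

- Multiple containers running in the same Pod may need to share files.

Kubernetes volumes (Volume) solve both problems.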

Volume overview

- Node-local volumes: hostPath, emptyDir

- Network volumes: NFS, Ceph, GlusterFS

- Public cloud: AWS EBS

- K8s resources: ConfigMap, Secret

Ephemeral volumes, node volumes, and network volumes

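emptyDir

emptyDir is an ephemeral volume bound to the lifecycle of its Pod: when the Pod is deleted, the volume is deleted with it.

- Use case: sharing data between the containers of a Pod

    apiVersion: apps/v1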
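The manifest above survives only as its first line. As a rough illustration of the use case, the sketch below shares one emptyDir volume between two containers. It uses a bare Pod rather than the apps/v1 workload the original evidently used, and the names `emptydir-demo`, `writer`, `reader`, and `shared`, as well as the busybox image tag, are assumptions made for this example.

```yaml
# Hypothetical Pod: two containers sharing a single emptyDir volume.
apiVersion: v1
kind: Pod
metadata:
  name: emptydir-demo          # assumed name, not from the original
spec:
  containers:
  - name: writer               # appends a timestamp to the shared file every 5s
    image: busybox:1.36
    command: ["sh", "-c", "while true; do date >> /data/out.log; sleep 5; done"]
    volumeMounts:
    - name: shared
      mountPath: /data
  - name: reader               # follows the same file through its own mount
    image: busybox:1.36
    command: ["sh", "-c", "touch /data/out.log; tail -f /data/out.log"]
    volumeMounts:
    - name: shared
      mountPath: /data
  volumes:
  - name: shared
    emptyDir: {}               # created with the Pod, removed with the Pod
```

If the Pod runs, `kubectl logs emptydir-demo -c reader` should show the lines written by the `writer` container.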

Verification

    $ kubectl apply -f emptyDir.yaml

emptyDir working directory on the node:

    /var/lib/kubelet/pods/<pod-id>/volumes/kubernetes.io~empty-dir

The pod-id can be obtained from the output of the docker ps command on the node.

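hostPath

Mounts a file or directory from the filesystem of the node where the Pod runs into the Pod's containers.

- Use case: containers in the Pod need to access files on the host, or some files in the container need to be kept on the host

- Any file or directory on the host can be mounted into a container

- Mounting host directories makes it possible to monitor host information such as running processes

    apiVersion: apps/v1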
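Only the first line of the original manifest is available, so the following is a minimal sketch of a hostPath mount rather than the author's file. The Pod name `hostpath-demo`, the volume name `host-tmp`, and the choice of the host directory `/tmp` are assumptions for illustration.

```yaml
# Hypothetical Pod that mounts the node's /tmp directory.
apiVersion: v1
kind: Pod
metadata:
  name: hostpath-demo          # assumed name
spec:
  containers:
  - name: busybox
    image: busybox:1.36
    command: ["sh", "-c", "sleep 36000"]
    volumeMounts:
    - name: host-tmp
      mountPath: /host-tmp     # where the host directory appears in the container
  volumes:
  - name: host-tmp
    hostPath:
      path: /tmp               # directory on the node running the Pod
      type: Directory          # the path must already exist and be a directory
```

Because the data lives on one particular node, a Pod rescheduled to another node sees that node's directory instead.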

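Network volume: NFS

Kubernetes supports NFS mounts and can automatically mount an NFS shared path into a Pod.

The official volume documentation lists the many supported network volume types: https://kubernetes.io/zh/docs/concepts/storage/volumes/

Install NFS

    $ yum install nfs-utils -y

Manifest

    apiVersion: apps/v1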
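The manifest is cut off after its first line. A sketch of what an NFS-backed Deployment could look like is given below. The label `app: nginx-nfs` matches the selector used in the verification command; the server address `192.168.0.10`, the export path `/ifs/kubernetes`, and the image tag are placeholders assumed for this example.

```yaml
# Hypothetical Deployment whose Pods all mount the same NFS export.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-nfs
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-nfs
  template:
    metadata:
      labels:
        app: nginx-nfs
    spec:
      containers:
      - name: nginx
        image: nginx:1.25
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
      volumes:
      - name: wwwroot
        nfs:
          server: 192.168.0.10   # assumed NFS server address
          path: /ifs/kubernetes  # assumed exported directory
```

Every node that may run these Pods needs the NFS client utilities installed, otherwise the mount fails when the Pod starts.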

Verification

    $ kubectl get pod -l app=nginx-nfs -w

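Persistent volume overview

- PersistentVolume (PV): an abstraction over how storage is created and used; storage is managed as a resource of the k8s cluster.

- PersistentVolumeClaim (PVC): a request for storage that lets users consume a volume without caring how it is implemented.

The cluster exposes storage as PVs, and each PVC is bound to a matching PV. When a Pod references a PVC as a volume, the cluster mounts the storage behind the bound PV into the Pod.

PV and PVC workflow

- When a PVC is created, the cluster automatically searches for a suitable PV and binds the PVC to it.

Manifest

    # Create a PV backed by NFS storage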
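Only the opening comment of the original manifest is preserved. The sketch below shows one way the three pieces could fit together: a statically created NFS-backed PV, a PVC that can bind to it, and a Pod that mounts the claim. All object names, the server address, the export path, and the sizes are assumptions for illustration.

```yaml
# Hypothetical PV backed by an NFS export.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-nfs-demo
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 192.168.0.10               # assumed NFS server address
    path: /ifs/kubernetes/pv-demo      # assumed directory created in advance
---
# Hypothetical PVC; capacity and access mode are what the cluster matches on.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-demo
spec:
  storageClassName: ""                 # only bind to PVs that have no StorageClass
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi
---
# Hypothetical Pod that uses the claim as a volume.
apiVersion: v1
kind: Pod
metadata:
  name: pvc-demo-pod
spec:
  containers:
  - name: nginx
    image: nginx:1.25
    volumeMounts:
    - name: data
      mountPath: /usr/share/nginx/html
  volumes:
  - name: data
    persistentVolumeClaim:
      claimName: pvc-demo
```

The Pod refers only to the claim name, so the NFS details stay in the PV and can change without touching the workload.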

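Verification

    $ kubectl get pv,pvc

Relationship between PV and PVC

- A PV and a PVC are bound one-to-one

- A Deployment can use multiple volumes and PVCs

PV and PVC matching

Storage capacity

- The PVC is matched to the PV whose capacity is closest to the request without being smaller than it

- If no PV satisfies the request, the PVC stays in the Pending state

- With a backend such as NFS, the capacity is not enforced as a limit; it is only a label used for matching, and the real capacity is determined by the storage backend

Access modes

- The PV must support the access modes requested by the PVC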

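PV lifecycle

Access modes (AccessModes)

AccessModes sets how a PV may be accessed and describes the access rights an application has to the storage resource. The modes are:

- ReadWriteOnce (RWO): read-write, but mountable by a single node only

- ReadOnlyMany (ROX): read-only, mountable by multiple nodes

- ReadWriteMany (RWX): read-write, mountable by multiple nodes

Reclaim policy (RECLAIM POLICY)

PVs currently support three policies:

- Retain: keeps the data; an administrator must clean it up manually

- Recycle: wipes the data in the PV, equivalent to running rm -rf /ifs/kuberneres/*

- Delete: deletes the backend storage associated with the PV as well

PV status (STATUS)

During its lifecycle a PV can be in one of four phases:

- Available: the PV is free and not yet bound to any PVC

- Bound: the PV is bound to a PVC

- Released: the PVC has been deleted, but the resource has not yet been reclaimed by the cluster

- Failed: automatic reclamation of the PV failed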

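Static provisioning

Creating a set of PVs in advance, before the project is deployed, is called static provisioning. Its drawback is the high maintenance cost; dynamic PV provisioning is usually used instead to make PV management more efficient.

For example, when NFS-type storage backs the PVs, an administrator has to create a separate directory in advance for each PV.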

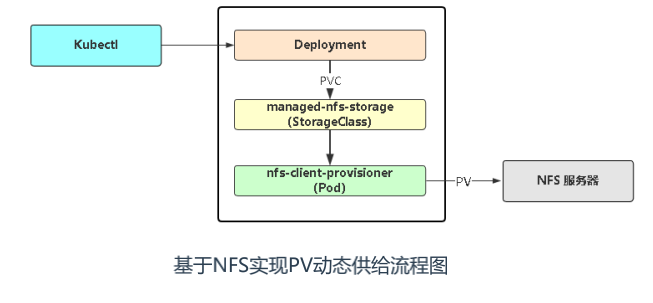

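Dynamic PV provisioning: StorageClass

With dynamic provisioning, a PV no longer has to be created in advance each time a PVC is created.

NFS-based dynamic provisioning

K8s does not support dynamic provisioning for NFS out of the box; a community-developed plugin has to be deployed separately.

Project: https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

    $ wget https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner/archive/refs/tags/nfs-subdir-external-provisioner-4.0.14.tar.gz

Manifest

    apiVersion: v1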
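The manifest is truncated after its first line, and the provisioner's own Deployment and RBAC objects are not reproduced here. Assuming the provisioner from the project above is already running, a StorageClass and a PVC that uses it might look like the sketch below. The class name `nfs-client` and the provisioner string are what the project's sample files are believed to use; the provisioner string must equal the PROVISIONER_NAME configured in the deployed provisioner, so check it against the actual installation. The PVC name and size are assumptions.

```yaml
# Hypothetical StorageClass pointing at the NFS subdir provisioner.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client                       # assumed class name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner   # must match the provisioner's configured name
parameters:
  archiveOnDelete: "false"               # do not keep an archived copy when the PV is deleted
---
# Hypothetical PVC; no PV is created by hand, the provisioner creates one on demand.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: dynamic-pvc-demo
spec:
  storageClassName: nfs-client
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
```

After the claim is applied, `kubectl get pv,pvc` should show a generated PV bound to `dynamic-pvc-demo`.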

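Deploying stateful applications: the StatefulSet workload controller

Stateless and stateful

The Deployment controller is designed on the assumption that all the Pods it manages are identical, provide the same service, do not care which Node they run on, and can be scaled out and in at will. Such applications are called "stateless"; a web service is an example.

Real-world workloads do not all fit this model. Distributed applications in particular run multiple instances that often depend on one another, for example in primary/replica or active/standby relationships. Such applications are called "stateful"; examples are MySQL primary/replica setups and Etcd clusters.

- Stateless: all Pods are identical and interchangeable

- Stateful: Pods differ from one another and are not peers

Deployment: stateless applications

DaemonSet: daemon applications

StatefulSet: stateful applications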

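StatefulSet

- Deploys stateful applications

- Gives each Pod an independent lifecycle, preserving Pod startup order and uniqueness

- Stable: unique network identity and persistent storage

- Ordered: graceful deployment and scaling, deletion, termination, and rolling updates

- Use cases: distributed applications, database clusters

Reference: https://kubernetes.io/docs/concepts/workloads/controllers/statefulset/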

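Stable network ID

A Headless Service (which differs from a regular Service only in that spec.clusterIP is set to None) maintains the network identity of the Pods.

Stable storage

StatefulSet storage volumes are created from a VolumeClaimTemplate, known as a volume claim template. When a StatefulSet uses …

Manifest

    # cat nginx.yaml

    # Use dynamically provisioned storage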
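Both listings above are reduced to their first lines. The sketch below combines the two ideas from this section: a Headless Service for stable network identity and a volume claim template for stable storage. The names `nginx-headless`, `web`, `nginx-sts`, and `www`, the image tag, and the StorageClass `nfs-client` are assumptions; the StorageClass must already exist in the cluster.

```yaml
# Hypothetical Headless Service: clusterIP None gives each Pod its own DNS record.
apiVersion: v1
kind: Service
metadata:
  name: nginx-headless
spec:
  clusterIP: None
  selector:
    app: nginx-sts
  ports:
  - name: web
    port: 80
---
# Hypothetical StatefulSet using that Service and dynamically provisioned storage.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web
spec:
  serviceName: nginx-headless      # ties Pod DNS names to the Headless Service
  replicas: 3
  selector:
    matchLabels:
      app: nginx-sts
  template:
    metadata:
      labels:
        app: nginx-sts
    spec:
      containers:
      - name: nginx
        image: nginx:1.25
        ports:
        - name: web
          containerPort: 80
        volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumeClaimTemplates:            # one PVC is created per Pod from this template
  - metadata:
      name: www
    spec:
      storageClassName: nfs-client # assumed StorageClass name
      accessModes:
      - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
```

With these names the Pods are created in order as `web-0`, `web-1`, `web-2`, each reachable at `web-N.nginx-headless.<namespace>.svc.cluster.local` (assuming the default cluster domain) and each holding its own claim `www-web-N`.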

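- Difference between StatefulSet and Deployment: Pods of a StatefulSet (sts) have an identity

- The three elements of that identity:

  - Domain name

  - Hostname

  - Storage (PVC)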

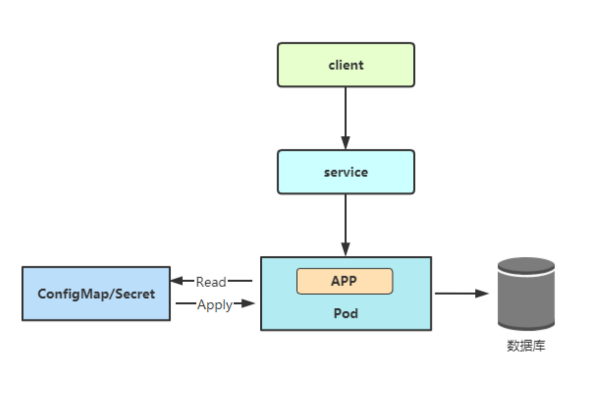

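Application configuration storage: ConfigMap

ConfigMap is mainly used to store configuration files.

Once a ConfigMap is created, its data is stored in the cluster's etcd, and Pods are created to consume that data.

A Pod can consume ConfigMap data in two ways:

- Injection as environment variables

- Mounting as a volume

Manifest

A ConfigMap holds two kinds of data:

- Key-value pairs

- Multi-line data

    # cat config-demo.yaml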
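The contents of config-demo.yaml are not preserved. The sketch below illustrates both data types and both consumption methods described above. The Pod name `configmap-demo-pod` is taken from the verification command; the ConfigMap name, the keys, and their values are assumptions for this example.

```yaml
# Hypothetical ConfigMap with key-value pairs and one multi-line entry.
apiVersion: v1
kind: ConfigMap
metadata:
  name: configmap-demo
data:
  # key-value pairs
  log_level: "info"
  max_conn: "100"
  # multi-line data, typically a whole configuration file
  app.properties: |
    port=8080
    mode=production
---
# Hypothetical Pod consuming the ConfigMap in both ways.
apiVersion: v1
kind: Pod
metadata:
  name: configmap-demo-pod
spec:
  containers:
  - name: demo
    image: busybox:1.36
    command: ["sh", "-c", "sleep 36000"]
    env:
    - name: LOG_LEVEL              # variable injection from a single key
      valueFrom:
        configMapKeyRef:
          name: configmap-demo
          key: log_level
    volumeMounts:
    - name: config                 # volume mount exposing the file
      mountPath: /config
      readOnly: true
  volumes:
  - name: config
    configMap:
      name: configmap-demo
      items:
      - key: app.properties
        path: app.properties       # appears as /config/app.properties
```

Inside the container, `echo $LOG_LEVEL` and `cat /config/app.properties` are simple ways to check each method.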

Verification

    # kubectl exec -it configmap-demo-pod -- sh

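Sensitive data storage: Secret

A Secret is similar to a ConfigMap but is intended for sensitive data; every value under its data field must be base64-encoded. Base64 is an encoding, not encryption.

Use case: credentials

kubectl create secret supports three types:

- docker-registry: stores image registry credentials

- generic: created from a file, a directory, or a literal string, for example to store a username and password

- tls: stores certificates, for example HTTPS certificates

Manifest

First base64-encode the username and password.

    # cat secret-demo.yaml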
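The contents of secret-demo.yaml are missing from the source. As an illustration, the sketch below stores a username and password in a Secret and exposes them to a Pod both as an environment variable and as files. The Pod name `secret-demo-pod` comes from the verification command; the Secret name, the keys, and the sample credentials (`admin` and `1f2d1e2e67df`) are assumptions.

```yaml
# Hypothetical Secret; values under data are base64-encoded, not encrypted.
apiVersion: v1
kind: Secret
metadata:
  name: secret-demo
type: Opaque
data:
  username: YWRtaW4=               # base64 of "admin"
  password: MWYyZDFlMmU2N2Rm       # base64 of "1f2d1e2e67df"
---
# Hypothetical Pod consuming the Secret.
apiVersion: v1
kind: Pod
metadata:
  name: secret-demo-pod
spec:
  containers:
  - name: demo
    image: busybox:1.36
    command: ["sh", "-c", "sleep 36000"]
    env:
    - name: DB_USER                # decoded value is injected as a variable
      valueFrom:
        secretKeyRef:
          name: secret-demo
          key: username
    volumeMounts:
    - name: secret                 # each key becomes a file under /etc/secret
      mountPath: /etc/secret
      readOnly: true
  volumes:
  - name: secret
    secret:
      secretName: secret-demo
```

The container sees the decoded values, so `echo $DB_USER` and `cat /etc/secret/password` return the plain text.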

Verification

    # kubectl exec -it secret-demo-pod -- sh